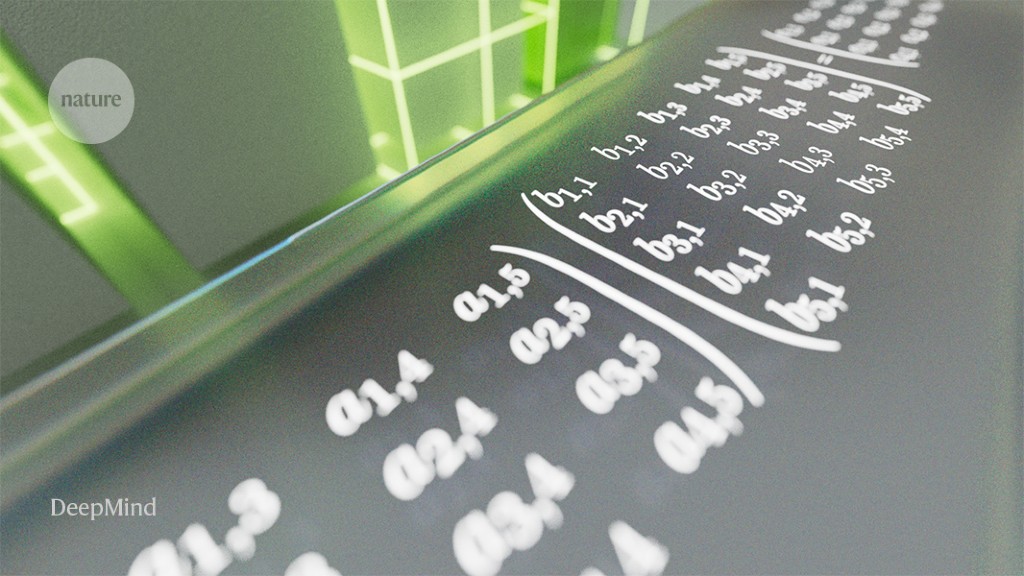

AlphaTensor was designed to perform matrix multiplications, but the same approach could be used to tackle other mathematical challenges. Credit: DeepMind

Researchers at DeepMind in London have shown that artificial intelligence (AI) can find shortcuts in a fundamental type of mathematical calculation, by turning the problem into a game and then leveraging the machine-learning techniques that another of the company’s AIs used to beat human players in games such as Go and chess.

The AI discovered algorithms that break decades-old records for computational efficiency, and the team’s findings, published on 5 October in Nature1, could open up new paths to faster computing in some fields.

“It is very impressive,” says Martina Seidl, a computer scientist at Johannes Kepler University in Linz, Austria. “This work demonstrates the potential of using machine learning for solving hard mathematical problems.”

Algorithms chasing algorithms

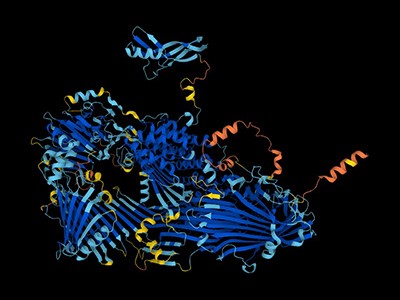

Advances in machine learning have allowed researchers to develop AIs that generate language, predict the shapes of proteins2 or detect hackers. Increasingly, scientists are turning the technology back on itself, using machine learning to improve its own underlying algorithms.

The AI that DeepMind developed — called AlphaTensor — was designed to perform a type of calculation called matrix multiplication. This involves multiplying numbers arranged in grids — or matrices — that might represent sets of pixels in images, air conditions in a weather model or the internal workings of an artificial neural network. To multiply two matrices together, the mathematician must multiply individual numbers and add them in specific ways to produce a new matrix. In 1969, mathematician Volker Strassen found a way to multiply a pair of 2 × 2 matrices using only seven multiplications3, rather than eight, prompting other researchers to search for more such tricks.
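Strassen's saving can be written out in a few lines. The sketch below compares the schoolbook 2 × 2 product with the standard textbook form of Strassen's seven-product scheme; the function names are illustrative and do not come from the paper.

```python
def naive_2x2(a, b):
    """Schoolbook 2 x 2 product: eight scalar multiplications."""
    (a11, a12), (a21, a22) = a
    (b11, b12), (b21, b22) = b
    return [
        [a11 * b11 + a12 * b21, a11 * b12 + a12 * b22],
        [a21 * b11 + a22 * b21, a21 * b12 + a22 * b22],
    ]


def strassen_2x2(a, b):
    """Strassen's scheme: seven scalar multiplications, at the cost of extra additions."""
    (a11, a12), (a21, a22) = a
    (b11, b12), (b21, b22) = b
    m1 = (a11 + a22) * (b11 + b22)
    m2 = (a21 + a22) * b11
    m3 = a11 * (b12 - b22)
    m4 = a22 * (b21 - b11)
    m5 = (a11 + a12) * b22
    m6 = (a21 - a11) * (b11 + b12)
    m7 = (a12 - a22) * (b21 + b22)
    return [
        [m1 + m4 - m5 + m7, m3 + m5],
        [m2 + m4, m1 - m2 + m3 + m6],
    ]


if __name__ == "__main__":
    a = [[1, 2], [3, 4]]
    b = [[5, 6], [7, 8]]
    print(naive_2x2(a, b))
    print(strassen_2x2(a, b))
```

Both functions return the same product. The trade is one fewer multiplication for several more additions, which pays off when the entries are themselves large matrix blocks.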

DeepMind’s approach uses a form of machine learning called reinforcement learning, in which an AI ‘agent’ (often a neural network) learns to interact with its environment to achieve a multistep goal, such as winning a board game. If it does well, the agent is reinforced — its internal parameters are updated to make future success more likely.

AlphaTensor also incorporates a game-playing method called tree search, in which the AI explores the outcomes of branching possibilities while planning its next action. In choosing which paths to prioritize during tree search, it asks a neural network to predict the most promising actions at each step. While the agent is still learning, it uses the outcomes of its games as feedback to hone the neural network, which further improves the tree search, providing more successes to learn from.

Each game is a one-player puzzle that starts with a 3D tensor — a grid of numbers — filled in correctly. AlphaTensor aims to get all the numbers to zero in the fewest steps, selecting from a collection of allowable moves. Each move represents a calculation that, when inverted, combines entries from the first two matrices to create an entry in the output matrix. The game is difficult, because at each step the agent might need to select from trillions of moves. “Formulating the space of algorithmic discovery is very intricate,” co-author Hussein Fawzi, a computer scientist at DeepMind, said at a press briefing, but “even harder is, how can we navigate in this space”.

To give AlphaTensor a leg up during training, the researchers showed it some examples of successful games, so that it wouldn’t be starting from scratch. And because the order of actions doesn’t matter, when it found a successful series of moves, they also presented a reordering of those moves as an example for it to learn from.
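The game described above can be pictured with a small sketch for the 2 × 2 case. It builds the tensor that encodes matrix multiplication, treats each move as subtracting a product of three vectors, and checks that the seven moves corresponding to Strassen's scheme bring every entry to zero. The index convention, the helper names and the move list are assumptions made for illustration; this is not DeepMind's code, and it contains no learning or search.

```python
from itertools import product


def matmul_tensor(n):
    """3D tensor with a 1 wherever entry (i, j) of the first matrix times
    entry (j, k) of the second contributes to entry (i, k) of the output.
    Entries are flattened row by row (an illustrative convention)."""
    size = n * n
    t = [[[0] * size for _ in range(size)] for _ in range(size)]
    for i, j, k in product(range(n), repeat=3):
        t[i * n + j][j * n + k][i * n + k] = 1
    return t


def apply_move(t, u, v, w):
    """One move: subtract the outer product of u, v and w from t in place."""
    for a, b, c in product(range(len(u)), range(len(v)), range(len(w))):
        t[a][b][c] -= u[a] * v[b] * w[c]


def is_zero(t):
    return all(x == 0 for plane in t for row in plane for x in row)


# Strassen's seven products written as moves (u, v, w):
# u selects entries of the first matrix, v entries of the second,
# and w says which output entries the product is added to or subtracted from.
STRASSEN_MOVES = [
    ([1, 0, 0, 1], [1, 0, 0, 1], [1, 0, 0, 1]),
    ([0, 0, 1, 1], [1, 0, 0, 0], [0, 0, 1, -1]),
    ([1, 0, 0, 0], [0, 1, 0, -1], [0, 1, 0, 1]),
    ([0, 0, 0, 1], [-1, 0, 1, 0], [1, 0, 1, 0]),
    ([1, 1, 0, 0], [0, 0, 0, 1], [-1, 1, 0, 0]),
    ([-1, 0, 1, 0], [1, 1, 0, 0], [0, 0, 0, 1]),
    ([0, 1, 0, -1], [0, 0, 1, 1], [1, 0, 0, 0]),
]


def play(moves, n=2):
    """Play a sequence of moves and report whether the tensor reached zero."""
    t = matmul_tensor(n)
    for u, v, w in moves:
        apply_move(t, u, v, w)
    return is_zero(t)


if __name__ == "__main__":
    print(play(STRASSEN_MOVES))                  # all seven moves, original order
    print(play(list(reversed(STRASSEN_MOVES))))  # same moves, reversed order
    print(play(STRASSEN_MOVES[:6]))              # one move short
```

Because each move is a subtraction, playing the same moves in any order leaves the same final tensor, which is why a reordered game is an equally valid training example. The number of moves needed to reach zero equals the number of scalar multiplications in the corresponding algorithm.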

Efficient calculations

The researchers tested the system on input matrices up to 5 × 5. In many cases, AlphaTensor rediscovered shortcuts that had been devised by Strassen and other mathematicians, but in others it broke new ground. When multiplying a 4 × 5 matrix by a 5 × 5 matrix, for example, the previous best algorithm required 80 individual multiplications. AlphaTensor uncovered an algorithm that needed only 76.

“It has got this amazing intuition by playing these games,” said Pushmeet Kohli, a computer scientist at DeepMind, during the press briefing. Fawzi tells Nature that “AlphaTensor embeds no human intuition about matrix multiplication”, so “the agent in some sense needs to build its own knowledge about the problem from scratch”.

The researchers tackled larger matrix multiplications by creating a meta-algorithm that first breaks problems down into smaller ones. When multiplying an 11 × 12 matrix by a 12 × 12 matrix, their method reduced the number of required multiplications from 1,022 to 990.
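For scale, it helps to compare these figures with the schoolbook method, and to see why splitting a problem into blocks pays off. The sketch below counts scalar multiplications for the schoolbook method and for Strassen's 2 × 2 scheme applied recursively to blocks. The article does not describe how DeepMind's meta-algorithm works internally, so the recursion here is only a familiar stand-in for the general idea, and the function names are hypothetical.

```python
def naive_count(n, m, p):
    """Scalar multiplications used by the schoolbook method
    for an n x m matrix times an m x p matrix."""
    return n * m * p


def strassen_count(size):
    """Scalar multiplications when Strassen's 2 x 2 scheme is applied
    recursively to a size x size product (size must be a power of two)."""
    if size == 1:
        return 1
    if size % 2:
        raise ValueError("size must be a power of two")
    # Seven half-size block products replace the eight of the schoolbook split.
    return 7 * strassen_count(size // 2)


if __name__ == "__main__":
    print(naive_count(4, 5, 5))     # schoolbook count for 4 x 5 times 5 x 5
    print(naive_count(11, 12, 12))  # schoolbook count for 11 x 12 times 12 x 12
    print(naive_count(4, 4, 4), strassen_count(4))
```

By this count the schoolbook method needs 100 multiplications for the 4 × 5 by 5 × 5 case and 1,584 for the 11 × 12 by 12 × 12 case, so the records quoted in the article were already well below it.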

AlphaTensor can also optimize matrix multiplication for specific hardware. The team trained the agent on two different processors, reinforcing it not only when it took fewer actions but also when it reduced runtime. In many cases, the AI sped up matrix multiplications by several per cent compared with previous algorithms. And sometimes the fastest algorithms on one processor were not the fastest on the other.

The same general approach could have applications in other kinds of mathematical operation, the researchers say, such as decomposing complex waves or other mathematical objects into simpler ones. “This development would be very exciting if it can be used in practice,” says Virginia Vassilevska Williams, a computer scientist at Massachusetts Institute of Technology in Cambridge. “A boost in performance would improve a lot of applications.”

Grey Ballard, a computer scientist at Wake Forest University in Winston-Salem, North Carolina, sees potential for future human–computer collaborations. “While we may be able to push the boundaries a little further with this computational approach,” he says, “I’m excited for theoretical researchers to start analysing the new algorithms they’ve found to find clues for where to search for the next breakthrough.”
