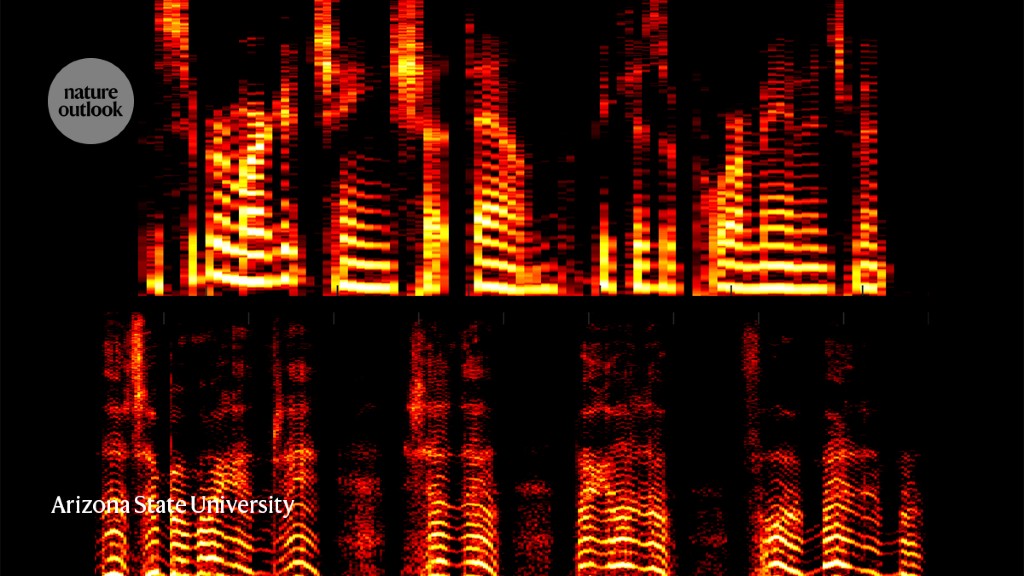

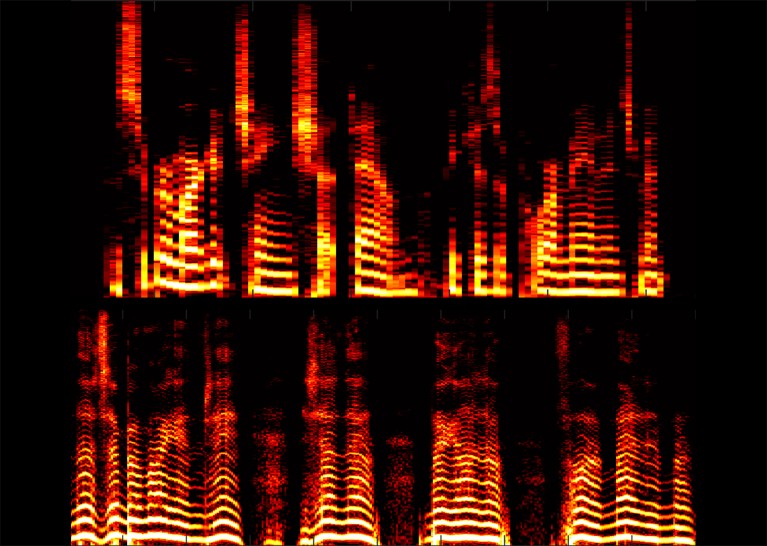

Spectrograms show the speech patterns of a healthy person (top) and an individual with motor neuron disease (bottom). Credit: Arizona State Univ.

Aural Analytics in Scottsdale, Arizona, spun off from Arizona State University, Tempe, in 2018.

Subtle changes in a person’s speech, such as longer pauses, over-reliance on filler words like ‘um’, or an altered vocal timbre, don’t generally draw much attention. But these seemingly benign changes in how we talk can herald hidden neurological damage.

“Those relationships are pretty well documented in the literature,” says Julie Liss, a speech pathologist at Arizona State University in Tempe. She thinks that audible signs such as these could give clinicians a head start on conditions such as Alzheimer’s disease. “We’ve done retrospective studies to show that there’s signal in speech in those early pre-diagnostic days, on the order of years earlier,” she says, referring to studies analysing the speech of former US president Ronald Reagan [1] and boxer Muhammad Ali [2].

Liss and her colleague at Arizona State, Visar Berisha, have co-founded Aural Analytics in Scottsdale, Arizona, which has been longlisted for The Spinoff Prize 2023. The company aims to commercialize algorithmic tools that can identify hidden signatures of speech pathology that are correlated with various neurological diseases or injuries.

The field has generated a lot of excitement, but has also raised concerns about data reproducibility. “We did an extensive systematic review of the field a few years ago, and it was almost impossible to compare studies because there were no standards,” says Saturnino Luz, referring to a 2020 paper [3]. Luz studies vocal patterns linked to Alzheimer’s disease at the University of Edinburgh, UK, and is not involved with the start-up company. Mindful of this issue, the Aural Analytics team relies on evaluation tools that speech pathologists already use to systematically test the motor, cognitive and other processes associated with oral communication.

Part of Nature Outlook: The Spinoff Prize 2023

Berisha — an electrical engineer who specializes in computational tools for speech processing and analysis, and who is chief analytics officer at Aural Analytics — is also cautious about relying too heavily on artificial-intelligence strategies. These are prone to misclassifying people based on non-disease-related patterns in the data used to train the algorithm. Such tools, he says, are “sensitive to all sorts of different confounding factors, like how tired you are or your dialect”. Instead, Aural Analytics uses more conventional statistical analysis methods and published models for assessing the data.

A role in clinical trials

Although there are strong indications that speech can reveal neurological impairment, Luz thinks that the field is a long way from making diagnoses of specific conditions based on these data. But he sees other near-term uses for speech-analytics software, including screening and monitoring the neurological health of clinical-trial participants. Berisha describes this as a current priority; in February, the company announced that its technology had been successfully deployed as a tool for evaluating speech-based biomarkers in a clinical trial of a therapy for motor neuron disease (amyotrophic lateral sclerosis) conducted by researchers at Massachusetts General Hospital in Boston.

Liss, chief clinical officer at Aural Analytics, also sees opportunities to help physicians monitor their patients more effectively. “Before your annual physical, you could go into your patient portal and you provide your speech sample,” she says. And even if the person is struggling to find the words to explain how they’re feeling, the way in which they express themselves could still provide a meaningful picture of their neurological health.
